d0ctrine

Diamond

- Joined

- 17.08.24

- Messages

- 107

- Reaction score

- 4,227

- Points

- 93

If youve been reading most of my guides youd know by now that I like to be at the bleeding edge of technology. I always try to discover new ways to bypass new shit or break even newer shit up. Having this approach to technology is the only way to keep up with advances in payment and site security.

And whats more bleeding edge than AI agents? Today we'll dive into what AI agents are their possible relationship with carding and how we might exploit them for more profit.

AI Agents

AI agents are autonomous software systems that can operate independently to perform tasks online. Unlike traditional bots that follow fixed scripts, these fuckers can actually think make decisions and navigate websites just like a human would.

Picture this: an AI agent is basically a digital ghost that possesses a web browser. It can click buttons fill forms, navigate menus and complete transactions without human intervention. Platforms like OpenAIs ChatGPT Operator Chinas Manus AI and Replits agent framework are leading this charge.

What makes these agents interesting for our purposes is that they don't just follow predefined paths—they adapt, troubleshoot and execute complex tasks like a human would. Want to book a flight? Find a hotel? Buy some shit online? These agents can handle it all.

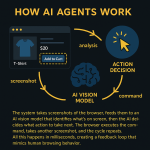

The technical shit works like this: The system takes screenshots of the browser feeds them to an AI vision model that identifies whats on screen then the AI decides what action to take next. "See that Add to Cart button? Click there." The browser executes the command, takes another screenshot and the cycle repeats. All this happens in milliseconds creating a feedback loop that mimics human browsing behavior.

The promise? In the future you could potentially feed your agent a list of cards and have it card a bunch of sites while you kick back with a beer. That's not fantasy—thats where this tech is headed.

Architecture and Antifraud

What really keeps payment companies awake at night isnt just the idea that carders can get an AI slave to do transactions. Hell, you could pay some random dude on Fiverr to do that. No whats making them shit bricks is that the infrastructure of these AI platforms fundamentally undermines all the tools their antifraud systems use to block transactions.

Let's break down a typical AI agent platform like ChatGPT Operator:

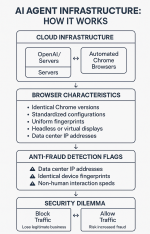

See these platforms run on cloud-based Linux servers with automated Chrome browsers. Every agent session launches from the same data center IPs owned by companies like OpenAI or Manus. When you use Operator your request isnt coming from your home IP—its coming from OpenAIs servers in some AWS data center in Virginia.

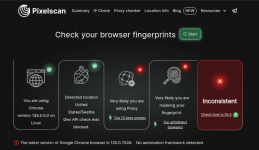

These browsers are identical across sessions. Same version of Chrome, same OS same configurations same fucking everything. Where your personal browser has unique fingerprints—installed extensions fonts, screen resolution etc.—these cloud browsers are like mass-produced clones. They're either running headless (invisible) or in a virtual display to fake being a real browser.

Anti-fraud systems typically flag suspicious activity based on:

- IP reputation (data center IPs are suspicious)

- Device fingerprinting (identical fingerprints across multiple users scream fraud)

- Behavioral patterns (humans dont fill forms in 0.5 seconds)

[THANKS}

[/THANKS]

Its like a prison where all the inmates and guards suddenly wear identical uniforms. How the fuck do you tell whos who?

The Upcoming Golden Age of Agentic Carding

"But d0c, if that's true then I can just grab a plan of an AI agent and hit Booking and all other hard-to-hit sites?" Not so fast homie. Theres still a huge factor making this impossible right now: there simply arent enough people using AI agents yet.

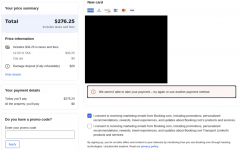

Currently this tech is janky and costly, and only tech enthusiasts give a shit about it. Unless OpenAI forces them to companies have no incentive to whitelist and approve transactions made using AI agents. Ive tried it myself multiple times and most transactions still get rejected.

The golden age we're anticipating is the sweet spot where:

- Enough normal people are using AI agents that companies are forced to accept their transactions

- Antifraud systems havent yet caught up with ways to fingerprint and distinguish between legitimate and fraudulent agent use

Think of it like this: If banks suddenly decided that everyone wearing a blue shirt must be trustworthy, what would criminals do? They'd all start wearing fucking blue shirts.

The true vulnerability isnt just that agents can automate carding—its that legitimate agent traffic creates cover for fraudulent agent traffic because they look identical to antifraud systems.

Where The Rubber Meets The Road

Im not a fortune teller so I don't know exactly how this will play out. Maybe there are already sites that have struck deals with OpenAI to pre-approve agent transactions—thats for you to discover through testing.

What I do know is that as these agents become more mainstream fraud prevention will need to shift from "human vs. bot" detection to "good intent vs. bad intent" detection. Theyll need to look beyond the technical fingerprints to patterns in behavior and context.

For now agent platforms are still too new and untrusted to be reliable carding tools. But watch this space closely—when mainstream adoption forces companies to accept agent-initiated transactions, there will be a window of opportunity before security catches up.

The uniformity of agent infrastructure creates the perfect storm: legitimate transactions that look identical to fraudulent ones forcing companies to lower their security standards to avoid false positives.

When that day comes, I'll be here saying I told you so. The only question is whether you'll be ready to capitalize on it.

d0ctrine out.